I have two identical Raspberry Pi 3B+ boards (RPi3B+) running OctoPrint to control my two 3D printers and to provide a livestream from the connected webcams when needed. A few months ago I noticed that the "newer" of the two Pis sporadically lost its WiFi connection after a few minutes or hours. To check whether it was a hardware problem, I swapped the SD cards between the two Pis. The problem moved with the SD card, which means it is a software problem. First attempts:

- Updated the system (dist-upgrade).

- Moved the Pi to a location with a better WiFi signal.

- Tried a WiFi reconnect script I had used before with a Raspberry Pi Zero W.

- Disabled power management with `sudo iwconfig wlan0 power off`.
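To confirm that the power management setting from the last item is really in effect (as far as I know, a setting made with `iwconfig` does not survive a reboot), the state can be read back from the `iwconfig` output. This is only a minimal sketch: the function name `wifi_power_mgmt` is made up for illustration, and it assumes the output contains a `Power Management:on` or `Power Management:off` field.

```shell
# Print "on" or "off" from iwconfig-style output read on stdin.
# wifi_power_mgmt is a hypothetical helper name, not part of any tool.
wifi_power_mgmt() {
    # Keep only the word that follows "Power Management:".
    sed -n 's/.*Power Management:\([a-z]*\).*/\1/p' | head -n 1
}

# Usage on the Pi:
#   iwconfig wlan0 | wifi_power_mgmt
```

If this prints `on` after a reboot, the `power off` command has to be run again.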

I connected a LAN cable, waited until the WiFi connection dropped, and tried various commands to restore it. Unfortunately nothing helped. I found errors in the syslog such as `mailbox indicates firmware halted`, along with some Raspberry Pi GitHub issues, but no final solution:

- wlan freezes in raspberry pi 3B+

- PI 3B+ wifi crash, firmware halt and hangs in dongle

- brcmfmac: brcmf_sdio_hostmail: mailbox indicates firmware halted

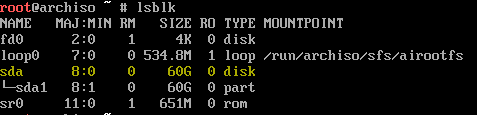
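To see how often the firmware halt shows up, the matching log lines can be counted. The sketch below assumes the messages end up in `/var/log/syslog` (this may differ on other setups), and `count_fw_halts` is a made-up helper name.

```shell
# Count "firmware halted" messages from the brcmfmac driver in a log file.
# $1: log file to search (default /var/log/syslog is an assumption).
# count_fw_halts is a hypothetical helper name.
count_fw_halts() {
    logfile="${1:-/var/log/syslog}"
    # grep -c prints the number of matching lines; it exits with 1 when
    # there are none, which is not treated as an error here.
    grep -c 'brcmfmac.*mailbox indicates firmware halted' "$logfile" || [ "$?" -eq 1 ]
}

# Usage on the Pi:
#   count_fw_halts
#   count_fw_halts /var/log/syslog.1
```

A count that keeps growing after a change means the change did not help.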

Then I kept looking for differences between the two Pis and found that the "working Pi" had an older driver/firmware version (7.45.154) than the "problem Pi", which had 7.45.229. I downgraded the firmware to 7.45.154 (the files in /lib/firmware/brcm; my older Pi still had them) and disabled power management. Now, after some weeks of printing (8 h each) with the webcam enabled, there have been no problems. With 7.45.229 it freezes even with power management disabled. The firmware files were the only thing I changed.

Working WiFi Firmware/Driver:

`dmesg | grep brcmfmac`

`Firmware: BCM4345/6 wl0: Feb 27 2018 03:15:32 version 7.45.154 (r684107 CY) FWID 01-4fbe0b04`
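To compare two Pis quickly, the version number can be pulled out of that line. This is a rough sketch: `brcm_fw_version` is a made-up helper name, and it assumes the kernel log line contains the word `Firmware` followed somewhere by `version <number>`, as in the output above.

```shell
# Print the brcmfmac firmware version found in dmesg-style text on stdin.
# brcm_fw_version is a hypothetical helper name.
brcm_fw_version() {
    # On lines mentioning "Firmware", keep the dotted number after " version ".
    # If several lines match, the most recent (last) one is printed.
    sed -n '/Firmware/s/.* version \([0-9][0-9.]*\).*/\1/p' | tail -n 1
}

# Usage on the Pi (dmesg may need sudo, depending on kernel settings):
#   dmesg | grep brcmfmac | brcm_fw_version
```

On the working Pi this should print `7.45.154`.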

Final solution (tl;dr):

- Disable power management with `sudo iwconfig wlan0 power off`

- Downgrade the drivers/firmware (extract brcm_7.45.154.tar to /lib/firmware/brcm)
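The downgrade step could look roughly like the sketch below, which keeps a copy of the current files so the change can be undone. It rests on assumptions: that the archive holds the firmware files at its top level (check with `tar -tf` first), and the helper name `downgrade_brcm_firmware` and the `.bak` backup suffix are made up for illustration.

```shell
# Back up the current firmware directory, then unpack an archive over it.
# $1: firmware archive, e.g. brcm_7.45.154.tar
# $2: target directory (default /lib/firmware/brcm)
# Assumption: the archive holds the firmware files at its top level.
# downgrade_brcm_firmware is a hypothetical helper name.
downgrade_brcm_firmware() {
    archive="$1"
    target="${2:-/lib/firmware/brcm}"
    backup="${target}.bak"

    [ -f "$archive" ] || { echo "archive not found: $archive" >&2; return 1; }
    [ -d "$target" ] || { echo "target not found: $target" >&2; return 1; }
    # Refuse to overwrite an earlier backup.
    [ ! -e "$backup" ] || { echo "backup already exists: $backup" >&2; return 1; }

    # Copy the existing files aside, then extract the older ones in place.
    cp -a "$target" "$backup" && tar -xf "$archive" -C "$target"
}
```

On the Pi this would be run from a root shell as `downgrade_brcm_firmware brcm_7.45.154.tar`, followed by a reboot so the driver loads the replaced files.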